Magical systems thinking

Systems thinkers fail because they ignore an important fact: systems fight back.

The systems that enable modern life share a common origin. The water supply, the internet, the international supply chains bringing us cheap goods: each began life as a simple, working system. The first electric grid was no more than a handful of electric lamps hooked up to a water wheel in Godalming, England, in 1881. It then took successive decades of tinkering and iteration by thousands of very smart people to scale these systems to the advanced state we enjoy today. At no point did a single genius map out the final, finished product.

But this lineage of (mostly) working systems is easily forgotten. Instead, we prefer a more flattering story: that complex systems are deliberate creations, the product of careful analysis. And, relatedly, that by performing this analysis – now known as ‘systems thinking’ in the halls of government – we can bring unruly ones to heel. It is an optimistic perspective, casting us as the masters of our systems and our destiny.

The empirical record says otherwise, however. Our recent history is one of governments grappling with complex systems and coming off worse. In the United States, HealthCare.gov was designed to simplify access to health insurance by knitting together 36 state marketplaces and data from eight federal agencies. Its launch was paralyzed by technical failures that locked out millions of users. Australia’s disability reforms, carefully planned for over a decade and expected to save money, led to costs escalating so rapidly that they will soon exceed the pension budget. The UK’s 2014 introduction of Contracts for Difference, intended to speed the renewables rollout by giving generators a guaranteed price, overstrained the grid and is a major contributor to the 15-year queue for new connections. Systems thinking is more popular than ever; modern systems thinkers have analytical tools that their predecessors could only have dreamt of. But the systems keep kicking back.

There is a better way. A long but neglected line of thinkers going back to chemists in the nineteenth century has argued that complex systems are not our passive playthings. Despite friendly names like ‘the health system’, they demand extreme wariness. If broken, a complex system often cannot be fixed. Meanwhile, our successes, when they do come, are invariably the result of starting small. As the systems we have built slip further beyond our collective control, it is these simple working systems that offer us the best path back.

The world model

In 1970, the ‘Club of Rome’, a group of international luminaries with an interest in how the problems of the world were interrelated, invited Jay Wright Forrester to peer into the future of the global economy. An MIT expert on electrical and mechanical engineering, Forrester had cut his teeth on problems like how to keep a Second World War aircraft carrier’s radar pointed steadily at the horizon amid the heavy swell of the Pacific.

The Club of Rome asked an even more intricate question: how would social and economic forces interact in the coming decades? Where were the bottlenecks and feedback mechanisms? Could economic growth continue, or would the world enter a new phase of equilibrium or decline?

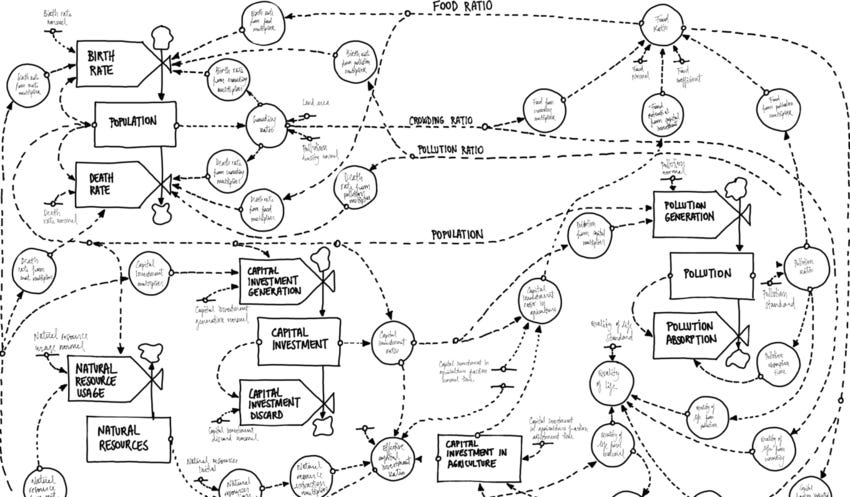

Forrester labored hard, producing a mathematical model of enormous sophistication. Across 130 pages of mathematical equations, computer graphical printout, and DYNAMO code, World Dynamics tracks the myriad relationships between natural resources, capital, population, food, and pollution: everything from the ‘capital-investment-in-agriculture-fraction adjustment time’ to the ominous ‘death-rate-from-pollution multiplier’.
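System dynamics models like World Dynamics are built from two kinds of quantity. Stocks accumulate, like population or pollution. Flows are the rates that change those stocks, and the model integrates them forward in small time steps. Nonlinear effects, such as a death-rate multiplier, are typically entered as lookup tables.

The sketch below shows that structure in Python under stated assumptions. The two stocks, every coefficient, the hypothetical `table_lookup` helper, and the pollution multiplier table are all invented for illustration. They are not Forrester's equations.

```python
# A toy stock-and-flow model in the spirit of system dynamics (DYNAMO-style):
# stocks are updated by integrating their flows with a fixed time step
# (Euler's method). ALL equations and coefficients are illustrative
# assumptions, not the World Dynamics model.

def table_lookup(x, xs, ys):
    """Hypothetical piecewise-linear lookup table, clamped at both ends."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    for i in range(1, len(xs)):
        if x <= xs[i]:
            t = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
            return ys[i - 1] + t * (ys[i] - ys[i - 1])


def simulate(years=100, dt=1.0):
    population = 1.0        # stock, normalized units (assumed)
    pollution = 0.1         # stock, normalized units (assumed)
    birth_rate = 0.03       # births per person per year (assumed)
    base_death_rate = 0.01  # deaths per person per year (assumed)
    history = []
    for _ in range(int(years / dt)):
        # Assumed multiplier: the death rate rises as pollution accumulates.
        death_multiplier = table_lookup(pollution, [0, 1, 2, 4], [1.0, 1.5, 3.0, 6.0])
        births = birth_rate * population
        deaths = base_death_rate * death_multiplier * population
        pollution_generation = 0.02 * population
        pollution_absorption = 0.1 * pollution
        # Euler step: stock(t + dt) = stock(t) + dt * (inflow - outflow)
        population += dt * (births - deaths)
        pollution += dt * (pollution_generation - pollution_absorption)
        history.append((population, pollution))
    return history


final_population, final_pollution = simulate()[-1]
print(f"after 100 years: population {final_population:.2f}, pollution {final_pollution:.2f}")
```

Each feedback loop in the toy is explicit: more people produce more pollution, which raises the death rate, which slows population growth. A full model chains dozens of such loops, and its output is only as reliable as every assumed table and coefficient.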

World leaders had assumed that economic growth was an unalloyed good. But Forrester’s results showed the opposite. As financial and population growth continued, natural resources would be consumed at an accelerating rate, agricultural land would be paved over, and pollution would reach unmanageable levels. His model laid out dozens of scenarios, and in most of them, by 2025, the world would already be in the first throes of an irreversible decline in living standards. By 2070, the crunch would be so painful that industrialized nations might regret their experiment with economic growth altogether. As Forrester put it, ‘[t]he present underdeveloped countries may be in a better condition for surviving forthcoming worldwide environmental and economic pressures than are the advanced countries.’

But, as we now know, the results were also wrong. Adjusting for inflation, world GDP is now about five times higher than it was in 1970 and continues to rise. More than 90 percent of that growth has come from Asia, Europe, and North America, but forest cover across those regions has increased, up 2.6 percent since 1990 to over 2.3 billion hectares in 2020. The death rate from air pollution has almost halved in the same period, from 185 per 100,000 in 1990 to 100 in 2021. According to the model, none of this should have been possible.

What happened? The blame cannot lie with Forrester’s competence: it’s hard to imagine a better systems pedigree than his. To read his prose today is to recognize a brilliant, thoughtful mind. Moreover, the system dynamics approach Forrester pioneered had already shown promise beyond the mechanical and electrical systems that were its original inspiration.

In 1956, the management of a General Electric refrigerator factory in Kentucky had called on Forrester’s help. They were struggling with a boom-and-bust cycle: acute shortages became gluts that left warehouses overflowing with unsold fridges. The factory based its production decisions on orders from the warehouse, which in turn got orders from distributors, who heard from retailers, who dealt with customers. Each step introduced noise and delay. Ripples in demand would be amplified into huge swings in production further up the supply chain.
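The amplification described above can be reproduced with very little machinery. The sketch below is a simplified model resting on assumptions, not the factory's actual policy:

- Each tier sees only the orders arriving from the tier below it.
- Each tier sets its target stock at (lead time + 1) times the latest order it saw.
- Each tier orders enough to replace what was taken, plus any change in that target.

The weekly customer demand pattern is also an assumption: a single step from 4 to 8 units.

```python
# Hypothetical chain: customers -> retailer -> distributor -> warehouse -> factory.
# Each tier follows a naive order-up-to rule (an assumption for illustration):
# target stock = (lead_time + 1) * latest order seen, and it orders enough to
# replace what was just taken plus any change in that target.

def tier_orders(incoming, lead_time=1):
    """Return the orders one tier places upstream, given the orders it receives."""
    orders = []
    previous_target = (lead_time + 1) * incoming[0]
    for demand in incoming:
        target = (lead_time + 1) * demand        # naive forecast: the latest order
        order = demand + (target - previous_target)
        orders.append(max(order, 0))             # a tier cannot order negative units
        previous_target = target
    return orders


def simulate_chain(customer_demand, tiers=4, lead_time=1):
    flows = [customer_demand]
    for _ in range(tiers):
        flows.append(tier_orders(flows[-1], lead_time))
    return flows  # flows[0] is customer demand; flows[k] is tier k's orders


# Assumed demand pattern: 4 units a week, stepping up to 8 in week 5.
demand = [4] * 4 + [8] * 8
chain = simulate_chain(demand)
for name, flow in zip(["customers", "retailer", "distributor", "warehouse", "factory"], chain):
    print(f"{name:>11}: peak order {max(flow)}")
# peak orders: customers 8, retailer 16, distributor 40, warehouse 112, factory 328
```

Customer demand merely doubles, yet the factory's peak order is more than 80 times the baseline weekly order. Each spike is followed by weeks of zero orders, which is the glut half of the boom-and-bust cycle.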

Looking at the system as a whole, Forrester recognized the same feedback loops and instability that could bedevil a ship’s radar. He developed new decision rules, such as smoothing production based on longer-term sales data rather than immediate orders, and found ways to speed up the flow of information between retailers, distributors, and the factory. These changes dampened the oscillations caused by the system’s own structure, checking its worst excesses.
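The first fix, basing decisions on longer-term sales rather than the latest order, can be sketched by swapping a naive forecast for exponential smoothing. The sketch assumes a simplified chain in which each tier sets its target stock at (lead time + 1) times its demand forecast and orders to replace what was taken plus any change in that target. Exponential smoothing and its parameter `alpha` are stand-ins chosen for illustration; the text does not say which rule Forrester used. The second fix, faster information flow, is not modeled here.

```python
# Same hypothetical order-up-to chain, but each tier forecasts demand with
# exponential smoothing instead of reacting fully to the latest order.
# alpha = 1.0 reproduces the naive rule; smaller alpha weighs a longer history.

def tier_orders_smoothed(incoming, lead_time=1, alpha=1.0):
    """Return the orders one tier places upstream, given the orders it receives."""
    forecast = incoming[0]
    previous_target = (lead_time + 1) * forecast
    orders = []
    for demand in incoming:
        forecast += alpha * (demand - forecast)  # exponential smoothing update
        target = (lead_time + 1) * forecast
        order = demand + (target - previous_target)
        orders.append(max(order, 0.0))           # no negative orders
        previous_target = target
    return orders


def peak_orders(customer_demand, tiers=4, alpha=1.0):
    """Peak order at each level, from customers up to the top tier."""
    flow = customer_demand
    peaks = [max(flow)]
    for _ in range(tiers):
        flow = tier_orders_smoothed(flow, alpha=alpha)
        peaks.append(max(flow))
    return peaks


# Assumed demand pattern: 4 units a week, stepping up to 8 in week 5.
demand = [4] * 4 + [8] * 8
print("naive:   ", peak_orders(demand, alpha=1.0))
print("smoothed:", [round(p, 1) for p in peak_orders(demand, alpha=0.2)])
```

With `alpha=0.2`, peak orders still grow from tier to tier, but far more slowly than under the naive rule. In this toy, smoothing dampens the oscillations rather than eliminating them.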

The Kentucky factory story showed Forrester’s skill as a systems analyst. Back at MIT, Forrester immortalized his lessons as a learning exercise (albeit with beer instead of refrigerators). In the ‘Beer Game’, now a rite of passage for students at the MIT Sloan School of Management, players take one of four different roles in the beer supply chain: retailer, wholesaler, distributor, and brewer. Each player sits at a separate table and can communicate only through order forms. As their inventory runs low, they place orders with the supplier next upstream. Orders take time to process, and shipments to arrive, and each player can see only their small part of the chain.

The objective of the Beer Game is to minimize costs by managing inventory effectively. But, as the GE factory managers had originally found, this is not so easy. Gluts and shortages arise mysteriously, without obvious logic, and small perturbations in demand get amplified up the chain by as much as 800 percent (‘the bullwhip effect’). On average, players’ total costs end up being ten times higher than the optimal solution.
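The game's scoring makes the cost of these swings concrete. Each week a player pays for every case sitting in inventory. At a higher rate, the player also pays for every case owed downstream but not yet shipped.

The sketch below assumes rates of 0.50 per case held and 1.00 per case backlogged per week, values often used in classroom versions. Treat those rates and the two example inventory paths as illustrative assumptions.

```python
# Weekly Beer Game-style cost accounting for one player.
# The rates are assumptions (commonly used classroom values).
HOLDING_COST = 0.50  # per case in stock, per week
BACKLOG_COST = 1.00  # per case owed downstream but unfilled, per week


def weekly_cost(net_inventory):
    """Cost for one week; a negative net inventory means a backlog."""
    if net_inventory >= 0:
        return HOLDING_COST * net_inventory
    return BACKLOG_COST * -net_inventory


def total_cost(net_inventory_by_week):
    return sum(weekly_cost(x) for x in net_inventory_by_week)


# Hypothetical players: one swings from shortage to glut, one holds a small buffer.
swings = [0, -6, -12, -4, 10, 24, 30, 18]
steady = [4, 4, 4, 4, 4, 4, 4, 4]
print(total_cost(swings), total_cost(steady))  # 63.0 16.0
```

The player whose inventory lurches from backlog to glut pays nearly four times as much as the one holding a steady buffer.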

With the failure of his World Model, Forrester had fallen into the same trap as his MIT students. Systems analysis works best under specific conditions: when the system is static; when you can dismantle and examine it closely; when it involves few moving parts rather than many; and when you can iterate fixes through multiple attempts. A faulty ship’s radar or a simple electronic circuit is an ideal case. Even a limited human element – with people’s capacity to pursue their own plans, resist change, form political blocs, and generally frustrate best-laid plans – makes things much harder. The four-part refrigerator supply chain, with the factory, warehouse, distributor and retailer all under the tight control of management, is about the upper limit of what can be understood. Beyond that, in the realm of societies, governments and economies, systems thinking becomes a liability, more likely to breed false confidence than real understanding. For these systems we need a different approach.

This is an excerpt from a new article on Works in Progress.

I had Forrester as a professor. I don't think he ever said the forecast outcome was correct. No student was taught to believe that the output of the modeling technique was precise. The purpose of the models was to understand scenarios and nonlinear systems. It is notable that the MIT students who took his courses went on to create the technology that avoided the worst case of the forecast. That was really the purpose: to train minds to make the world better. I believe he said at the time that you could not model price signals properly, which was ultimately the conclusion of the economists who rebutted Ehrlich.

Forrester also did key work on early radar control systems, led development of SAGE, the first national computerized air-defense system, in the 1950s, invented magnetic-core memory, helped start Digital Equipment Corporation (DEC), and demonstrated why massive public housing projects increase poverty.

Great read! And reassuring to learn that the lazy instinct to start over with something simple is actually the wise and effective thing.