On Monday, we published the lead article from Issue 19 of Works in Progress, on the bad science behind expensive nuclear. We’re also hosting a Stripe Press pop-up coffee shop and bookstore on Saturday, June 28, in Washington, DC. RSVP here if you can make it.

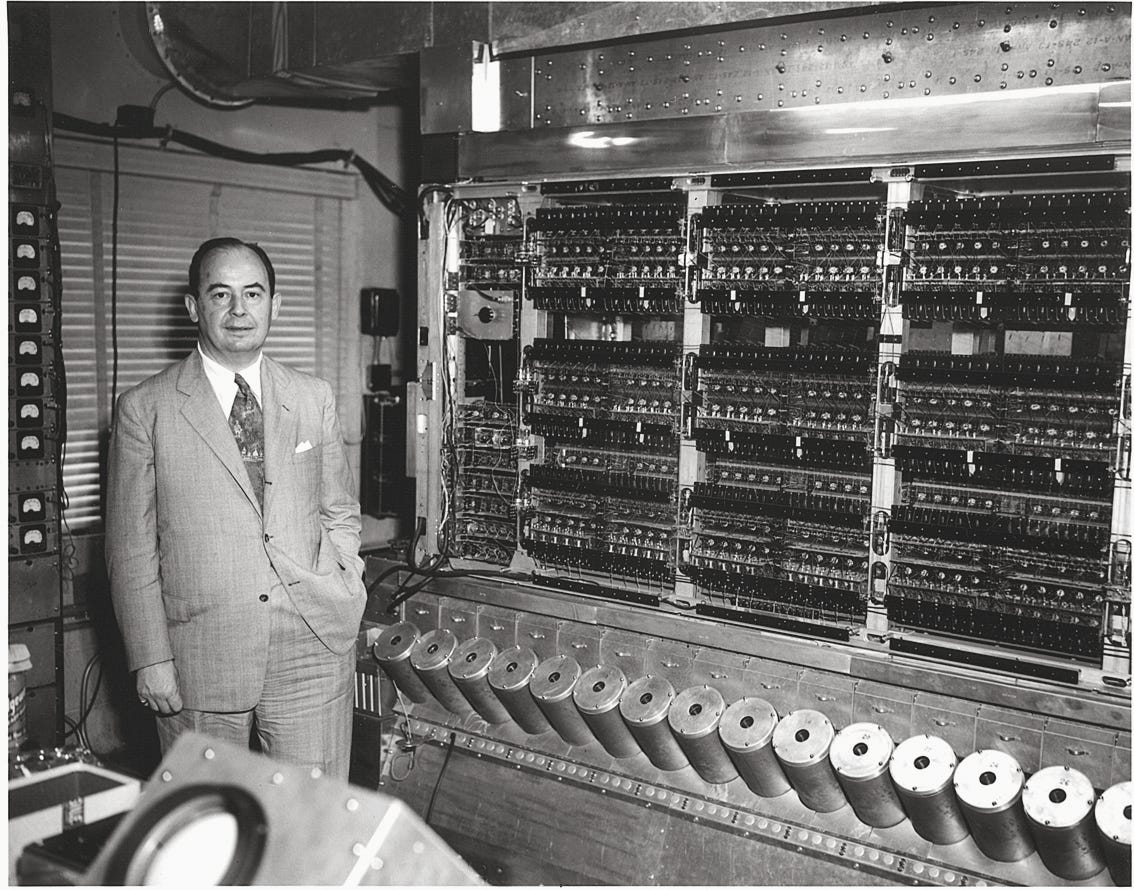

John von Neumann’s life was cut short in 1957, when he died of pancreatic cancer at the age of 53. With his passing, the world lost an outlier among prodigies. ‘Most mathematicians prove what they can. Von Neumann proves what he wants,’ quipped his colleagues at Princeton’s Institute for Advanced Study, where von Neumann had assumed a tenured professorship in 1933 at the age of 30. He was a lover of parties and limericks, and a genius whose foundational insights shaped game theory, nuclear physics, the mathematical foundations of quantum mechanics, and many other fields besides.

Among his many interests, von Neumann had begun exploring the connection between computation and thermodynamics. The inquiry stemmed from his observation that computers, unlike most machines, seemed to generate heat without performing physical work in the conventional sense. While appliances like light bulbs and cars convert electricity into useful forms of energy, computers primarily manipulate information. The link between information processing and energy expenditure intrigued him: if computers play with abstractions rather than matter, why did they get hot?

In this, von Neumann was following a long tradition of engineers and physicists wrestling with how to conserve energy. Over a century earlier, the French physicist Sadi Carnot had demonstrated that any steam engine operating between two temperatures has a maximum possible efficiency that cannot be exceeded, regardless of its design. Von Neumann intuited that a similar limit might govern the efficiency of computers – where, instead of steam, the ‘engine’ involved the movement of information.

Though von Neumann never published these thoughts, his questions echoed in the minds of those who’d worked in his orbit. One such person was Rolf Landauer, a physicist at IBM. Like von Neumann, Landauer was a Jewish refugee, and had fled Nazi persecution in Germany as a teenager in 1938. After earning his PhD from Harvard, Landauer joined IBM’s research division, where he began to study the thermodynamic costs of computation.

In 1961, Landauer published a paper that formalized what would become known as Landauer's principle. This established that erasing information necessarily releases heat. To understand why this happens, we need to consider what computing means from the perspective of information. A typical chip is made up of multiple logic gates, which perform operations by producing outputs based on inputs. Most logic gates have fewer possible output states than input states. For example, a gate with two inputs (each either 0 or 1) has four possible input combinations (00, 01, 10, 11), but only two possible output states (0 or 1).

This means the operation erases information: from the output alone, you can’t know for sure which inputs produced it. Imagine calculating 2+2. Once you have arrived at the answer 4, it is no longer possible to tell whether it came from 3+1, 2+2, or 4-0.
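To make the many-to-one point concrete, here is a minimal Python sketch. It assumes an AND gate as the example (the text above does not name a specific gate), and the helper name `preimages` is purely illustrative. It groups every input pair by the output it produces.

```python
from collections import defaultdict
from itertools import product


def and_gate(a: int, b: int) -> int:
    """A conventional two-input AND gate (chosen here as an example)."""
    return a & b


def preimages(gate):
    """Map each output value to the list of input pairs that produce it."""
    table = defaultdict(list)
    for a, b in product((0, 1), repeat=2):
        table[gate(a, b)].append((a, b))
    return dict(table)


and_preimages = preimages(and_gate)
for output, inputs in sorted(and_preimages.items()):
    print(f"output {output} <- inputs {inputs}")
```

An output of 0 could have come from any of three input pairs, so seeing only the output leaves the inputs undetermined.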

This reduction in possible states affects something called entropy. It’s a complex and contested term, but one way to think about it is as a measure of the number of ways a system can be arranged. Higher entropy means more possible arrangements; lower entropy means fewer. An easy way to illustrate this is with a deck of cards. A new deck arranged in ascending order by suit and number has low entropy, since there’s only one way to sort it; but a shuffled deck has higher entropy, since there are so many ways it could end up. In computing, this is why logical operations lower the system’s entropy. They reduce four possible input states to two possible output states.
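One rough way to put numbers on this is to measure entropy in bits, as the base-2 logarithm of the number of possible arrangements. The sketch below makes a simplifying assumption that every arrangement is equally likely, and the function name `entropy_bits` is illustrative rather than anything from the article.

```python
import math


def entropy_bits(num_states: int) -> float:
    """Entropy in bits, assuming all states are equally likely."""
    return math.log2(num_states)


# A factory-ordered deck has one arrangement; a shuffled one could be any of 52!.
sorted_deck_bits = entropy_bits(1)
shuffled_deck_bits = entropy_bits(math.factorial(52))

# A two-input gate: four possible input states, at most two output states.
gate_input_bits = entropy_bits(4)
gate_output_bits = entropy_bits(2)

print(f"sorted deck:   {sorted_deck_bits:.1f} bits")
print(f"shuffled deck: {shuffled_deck_bits:.1f} bits")
print(f"gate: {gate_input_bits:.0f} bits in, at most {gate_output_bits:.0f} bit out")
```

Under these assumptions the gate discards at least one bit per operation, which is the loss the rest of the argument builds on.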

But according to the second law of thermodynamics, a system’s total entropy can never decrease. This means that ‘lost’ entropy must be compensated for by generating heat. It can be thought of as nature’s version of double-entry bookkeeping – any reduction in a system’s information content is balanced by an increase in thermal energy. Landauer figured out that every time a computer resets a bit of memory from 1 to 0 the minimum heat dissipated is about 3 × 10^-21 joules at room temperature – roughly equivalent to the energy released when two molecules collide with one another.
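The figure quoted above can be checked with a short calculation. Landauer's bound is usually written as k_B · T · ln 2 per erased bit; that formula and the choice of 300 K for "room temperature" are not stated in the article and are assumptions of this sketch.

```python
import math

# Boltzmann constant in joules per kelvin (exact by SI definition).
BOLTZMANN_J_PER_K = 1.380649e-23


def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum heat dissipated when one bit is erased at a given temperature."""
    return BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


# Assumption: "room temperature" taken as 300 K.
room_temperature_limit = landauer_limit_joules(300.0)
print(f"{room_temperature_limit:.2e} J per erased bit")
```

At 300 K this comes to a little under 3 × 10^-21 joules, consistent with the figure in the text, and the bound scales linearly with temperature.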

Landauer had transformed an intuition about the physics of computation into a precise and measurable principle. But his solution presented a conundrum. If erasing information always costs energy, then perhaps computation without destruction could proceed with a negligible energy cost. However, for lossless processing, memory would need to grow continually, creating an impractical accumulation of stored states with each calculation – a kind of computational junkyard of past operations.

Enter Charles Bennett – a physicist also working at IBM Research. In the early 1970s, he had an important insight: what if, after completing a computation and recording the result, the computer could run the entire process backward, returning to its initial state? He set out his thinking in a 1973 paper, ‘Logical Reversibility of Computation,’ which became the foundation for a series of papers he would publish over the next decade and beyond. Bennett’s approach was like a hiker who retraces their steps, removing all evidence of their passage through the wilderness. The computation could proceed forward, produce a result, make a copy of the answer, and then ‘decompute’ the whole trace by reversing itself – recycling the energy rather than releasing it as heat.

Consider this in terms of addition. In conventional computing, when we calculate 3 + 5 = 8, the information about which numbers were added is typically discarded once the sum is obtained. In Bennett's reversible paradigm, the computation would temporarily retain enough information to work backward from 8 to recover the original inputs 3 and 5.

By preserving this information, the system avoids the thermodynamic costs associated with erasing data. Bennett offered a way around the limitations that Landauer had identified, and demonstrated that reversible computing was theoretically possible.
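As a toy illustration of the compute, copy, and decompute idea, the following Python sketch runs a reversible addition forward, records the answer, and then runs the step backward to restore the starting state. It is a software analogy only, not Bennett's formal construction: the function names are hypothetical, and copying the answer is treated as free here.

```python
def add_forward(state):
    """(a, b) -> (a, a + b): keeps `a`, so the step can be undone."""
    a, b = state
    return (a, a + b)


def add_backward(state):
    """(a, total) -> (a, total - a): the exact inverse of add_forward."""
    a, total = state
    return (a, total - a)


def compute_copy_uncompute(state, steps):
    """Run each step forward, copy the answer, then undo the steps in reverse."""
    for forward, _ in steps:
        state = forward(state)
    answer = state[-1]  # copy the result out before retracing
    for _, backward in reversed(steps):
        state = backward(state)
    return state, answer


restored, answer = compute_copy_uncompute((3, 5), [(add_forward, add_backward)])
print(restored, answer)  # (3, 5) 8
```

The key design choice is that every forward step is paired with an inverse, so the intermediate record never has to be erased; it is simply walked back.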

In the early 1980s, two MIT researchers, Tommaso Toffoli and Ed Fredkin, would conceptualize so-called reversible logic gates – the fundamental building blocks for reversible computers. Fredkin was born to Russian immigrants in Los Angeles and had dropped out of Caltech to train as a fighter pilot. In 1962 he founded one of the first computing companies, Information International, Inc. Commonly known as ‘Triple I’ or ‘III’, it was an inventive place, developing machines for transferring digital images to film, scanning words on a page, and generating computer graphics. When III went public, Fredkin became a millionaire, and bought himself a private island in the Caribbean. At the age of 34, he was recruited by Marvin Minsky to join MIT as a full professor, despite possessing no formal degree.

Toffoli and Fredkin’s gates had the remarkable property that their outputs uniquely determined their inputs, which meant no information was ever lost. They achieved this by having the same number of output bits as input bits, maintaining a one-to-one correspondence between input and output states. These gates represented a crucial step from theory to reality. While Bennett had proved that reversible computing was theoretically possible, Fredkin and Toffoli showed how it could be built.
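The one-to-one property is easy to check by brute force. The sketch below uses the standard textbook definitions of the Toffoli gate (controlled-controlled-NOT) and the Fredkin gate (controlled swap); these definitions are not spelled out in the article, and the helper `is_reversible` is an illustrative name.

```python
from itertools import product


def toffoli(a: int, b: int, c: int):
    """Flip the target bit c only when both control bits a and b are 1."""
    return (a, b, c ^ (a & b))


def fredkin(c: int, a: int, b: int):
    """Swap a and b only when the control bit c is 1."""
    return (c, b, a) if c else (c, a, b)


def is_reversible(gate, width: int = 3) -> bool:
    """A gate is reversible if distinct inputs always give distinct outputs."""
    inputs = list(product((0, 1), repeat=width))
    outputs = {gate(*bits) for bits in inputs}
    return len(outputs) == len(inputs)


all_inputs = list(product((0, 1), repeat=3))
print("Toffoli reversible:", is_reversible(toffoli))
print("Fredkin reversible:", is_reversible(fredkin))
# Applying either gate twice returns the original bits.
print("Toffoli self-inverse:", all(toffoli(*toffoli(*x)) == x for x in all_inputs))
print("Fredkin self-inverse:", all(fredkin(*fredkin(*x)) == x for x in all_inputs))
```

With the target bit set to 0, the Toffoli gate's third output equals a AND b, so conventional logic can be recovered while the two inputs are carried along rather than discarded.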

Around the same time as Toffoli and Fredkin were devising their reversible logic gates, the physicist Richard Feynman began cultivating his own ideas about the potential of reversible computation. Feynman had crossed paths with von Neumann on the Manhattan Project, and had become a legendary figure in his own right. He was also close friends with Fredkin, and served as best man at Fredkin’s (second) wedding in 1980 in the Virgin Islands. Lately, Feynman had also become fascinated by the question of whether computers could efficiently simulate quantum physics.

Quantum mechanics is built on reversible laws, meaning that at the microscopic level, the equations governing subatomic particles work the same whether time moves forward or backward. In everyday life, though, we only see time flowing in one direction. A dropped glass shatters, but it never jumps back together. This is because the macroscopic world is dominated by entropy, which gives time its arrow. But underneath it all, nature does not actually distinguish between past and future. Reversible computing is not just a clever engineering trick; it may be tapping into the same fundamental logic that governs reality itself. It feels strange, but only because the universe is far stranger than how we experience it through our senses.

By the mid-1980s, Feynman had integrated these ideas into his vision for the future of computing.

‘The laws of quantum mechanics are reversible,’ Feynman explained at a lecture delivered in Tokyo, ‘and therefore we must use the invention of reversible gates.’ Acknowledging the work of Charles Bennett, Feynman compared a standard computer to a car ‘which has to start and stop by turning on the engine and putting on the brakes, turning on the engine and putting on the brakes; each time you lose power.’ The reversible alternative would be like connecting the car’s wheels to flywheels – storing energy to be used by the system, rather than wasting it.

Feynman recognized that reversible computing represented an insight into the nature of computation, even if its full implementation would be impossible for a long time.

Unfortunately, reversible computing proved to be the victim of historical irony. Quantum computing, an outgrowth of the search for reversible computing, would end up distracting from and overshadowing its originator.

The quantum approach dazzled funders with its properties – the ability to exist in multiple states simultaneously through superposition, to exhibit spooky action-at-a-distance via entanglement, and to promise exponential speedups on problems like factoring large numbers. And as quantum computing grew in glamour and status, it cannibalized the field that had spawned it. Reversible computing became unfashionable, while quantum research drew billions in investment.

Outside academia, mainstream computing forged ahead. In 1965, Gordon Moore, who would go on to co-found Intel, had observed that the number of transistors on integrated circuits was doubling at a steady pace – a rate he later put at approximately every two years. The observation, and the growth in computing power that came with it, became a predictive heuristic known as Moore’s Law.

Intel was at the forefront of this boom. At its peak, Intel released new products roughly every six months, with double the performance or half the price of their predecessors. Yet this breakneck pace imposed a certain cost on the computing industry. Intel’s architecture wasn’t intrinsically superior as the foundation for mass computation; after all, it was based on a transistor originally developed by Bell Labs to boost long-distance telephone signals, and was later adapted for computing because it could perform Boolean logic operations.

The pursuit of market dominance made it nearly impossible for alternative frameworks like reversible computing to gain a foothold – even inside Intel itself, where internal teams continued to tinker and experiment with different approaches. The established infrastructure, supply chains, and continuous stream of improvements in conventional computing created momentum that was difficult to overcome.

To be fair, Intel’s strategy worked spectacularly well for decades. It had its share of competition: Atari was built with Motorola processors, and the iPhone ran and continues to run on chips designed by a British company known as ARM. But it was Intel that catalyzed the PC, the World Wide Web, the personal computing revolution.

Yet as the years have rolled on, physical limitations have emerged. By the early 2000s, Dennard scaling, which had allowed transistors on chips to become smaller without a corresponding increase in power density, broke down. Suddenly, chips hit what the industry called a ‘power wall’: they couldn’t get faster simply by shrinking their transistors, because they’d have ended up melting from the heat. The risks posed by heat, rather than our ability to design faster circuits, became the primary constraint on performance. The industry shifted towards multi-core processors, but the fundamental problem remains: conventional computing is approaching its thermodynamic limit.

The energy required to power AI compute in particular is astronomical – in large part because so much of it is frittered away as heat. This demand has emerged precisely as Moore’s Law is faltering. We find ourselves needing exponential increases in computation, yet our current architecture can’t deliver them without large increases in our energy supply.

Redeeming the bet on reversible computing is what my team and I at Vaire Computing are working towards. We are developing what we call Near-Zero Energy Chips (NZECs). Some say reversible computing is a 21st-century technology that was discovered by accident in the 20th century. We are not there yet. While Vaire’s chips can recycle energy, the current challenge is that reversible logic – the fundamental circuitry – is more complex than its conventional counterpart, and that overhead offsets some of the energy savings. When we manage to reduce these overheads, NZECs will start to make commercial sense.

Could we one day process information at energy levels below the Landauer limit? It might sound like a violation of physics, but it is not. Landauer’s limit only applies when information is erased. In reversible computing, nothing is lost, so the limit does not apply in the same way. The real challenge is to build systems that preserve information throughout the computation while remaining practical and efficient.

If we could design computers from first principles with humanity’s long-term needs in mind, we probably would not have built the machines we rely on today. But the path ahead may still lead to the destination that von Neumann glimpsed: computers that process information without waste, and where energy costs are no longer a barrier to progress.

Rodolfo Rosini is the CEO of Vaire Computing.

A shuffled deck, once shuffled, has only one state: the specific ordering of the cards it contains. Maybe an example comparing a deck of glued cards with a deck of loose cards would make more sense? Or is the number of possible end states from shuffling an ordered deck lower than from shuffling a randomly ordered one? It is debatable whether you need to assume a perfect shuffling process … (unless, of course, I am missing something in my understanding of entropy)

Nice survey. Thanks!