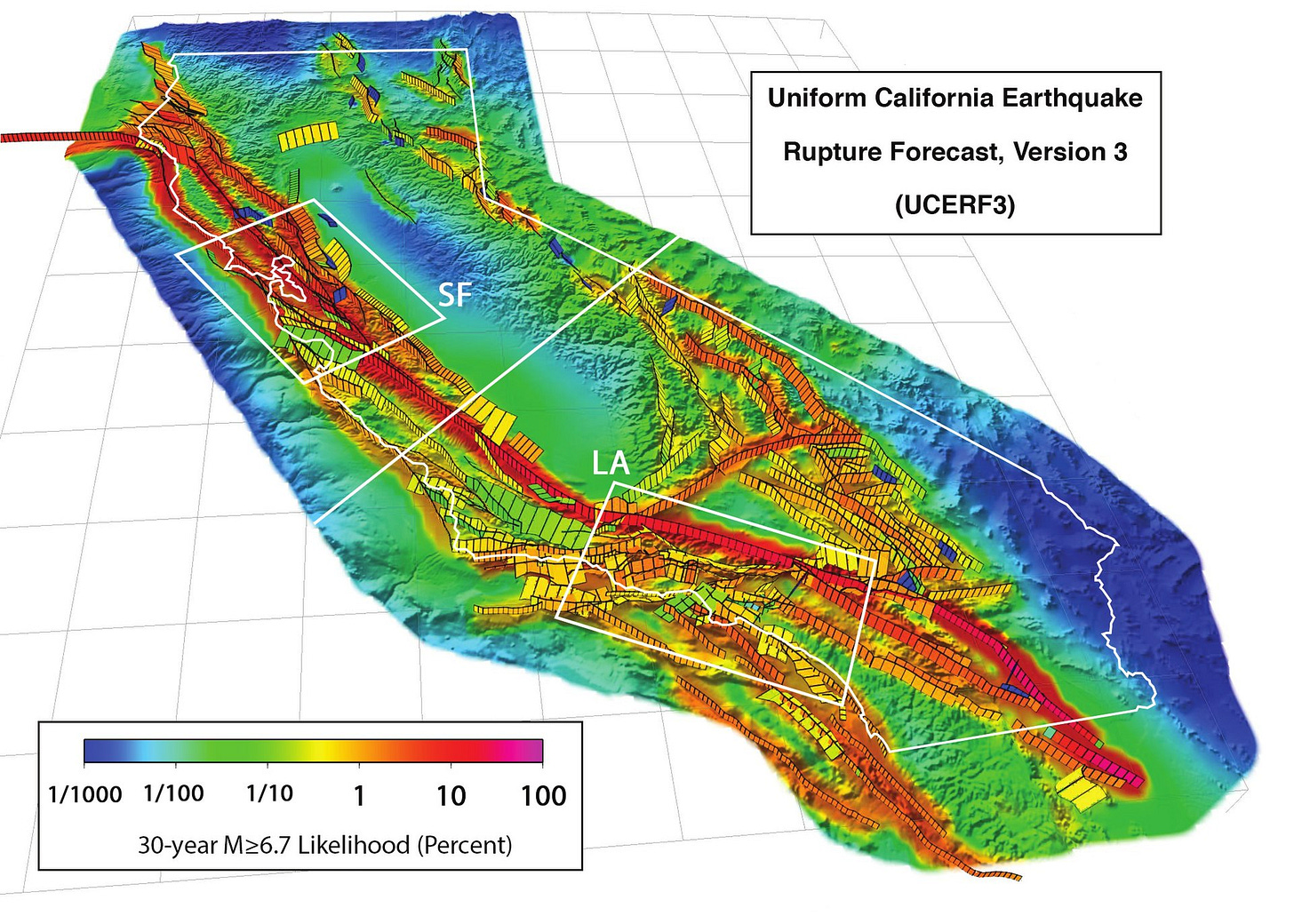

Over the next century, there is a nearly 70 percent chance that the Cascadia Subduction Zone, the San Andreas Fault, or both will unleash the energy equivalent of anywhere from 30,000 to 60,000 Hiroshima bombs onto the American West Coast.
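The bomb comparison can be sanity-checked with a short calculation. This sketch rests on two assumptions the article does not state: the Gutenberg–Richter energy–magnitude relation, log10(E) = 1.5M + 4.8 with E in joules, and a Hiroshima yield of about 15 kilotons of TNT. Both are rough conventions, so treat the result as an order-of-magnitude check, not a precise conversion.

```python
import math

# Assumptions (not from the article): Gutenberg–Richter energy relation
# log10(E) = 1.5 * M + 4.8 with E in joules, and a ~15 kt Hiroshima yield.
JOULES_PER_KILOTON_TNT = 4.184e12
HIROSHIMA_JOULES = 15 * JOULES_PER_KILOTON_TNT


def radiated_energy_joules(magnitude: float) -> float:
    """Approximate radiated seismic energy for a given magnitude."""
    return 10 ** (1.5 * magnitude + 4.8)


def hiroshima_equivalents(magnitude: float) -> float:
    """Express that energy as a number of Hiroshima-sized bombs."""
    return radiated_energy_joules(magnitude) / HIROSHIMA_JOULES


def magnitude_for_bombs(bombs: float) -> float:
    """Invert the relation: the magnitude whose energy equals `bombs` Hiroshimas."""
    return (math.log10(bombs * HIROSHIMA_JOULES) - 4.8) / 1.5


for m in (8.0, 9.0, 9.2):
    print(f"M{m}: about {hiroshima_equivalents(m):,.0f} Hiroshima equivalents")

print(f"30,000 bombs is roughly M{magnitude_for_bombs(30_000):.2f}")
print(f"60,000 bombs is roughly M{magnitude_for_bombs(60_000):.2f}")
```

Under these assumptions, 30,000 to 60,000 Hiroshima equivalents corresponds to a magnitude of roughly 9.0 to 9.2, and each whole step in magnitude multiplies the radiated energy by about 32 (that is, 10^1.5).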

The last time either of these faults had a major rupture (1700 for Cascadia, 1906 for the northern San Andreas, and 1857 for the southern San Andreas), the high-impact zones had about 550,000 residents in total. Today, approximately 35 million people live in the same regions. (The Hayward Fault, which runs directly through the Bay Area and is overdue for a rupture, is also extremely dangerous, but for the purposes of this discussion we’ll focus on the two largest faults.)

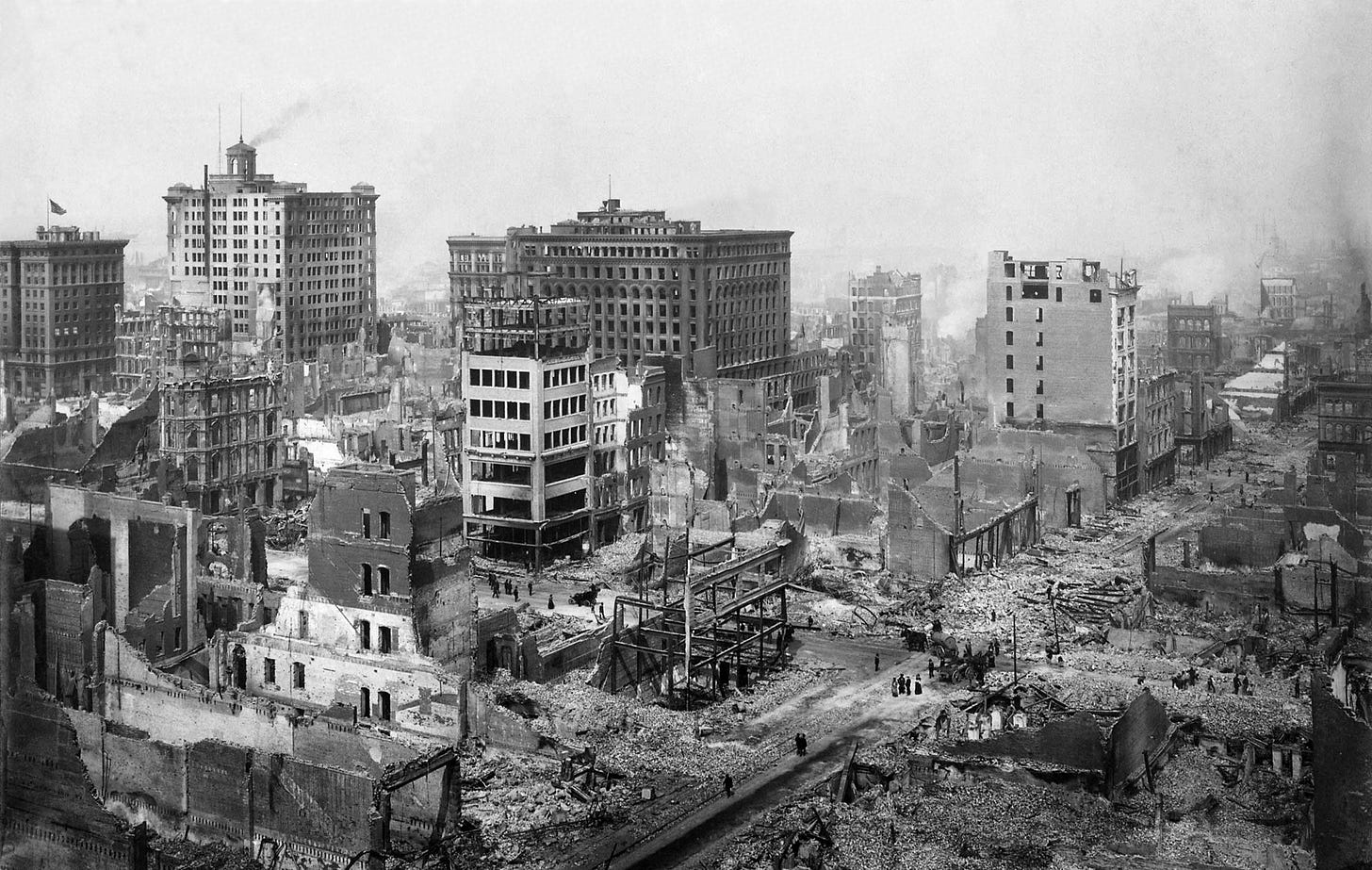

The most destructive of these historical ruptures, the 1906 San Francisco earthquake, destroyed half the buildings in the city and killed over 3,000 people. Despite major advances in building codes and hundreds of millions of dollars spent on regulation and new building methods, a vast portion of the built environment remains highly vulnerable. In high-impact zones on the American West Coast, up to 20 percent of buildings are expected to fail in a Cascadia or San Andreas rupture. The rest are built only to survive smaller quakes, so how they would fare in the ultra-large quakes both faults can produce is unclear.

If either fault were to rupture, it would be the single worst natural disaster in American history. It would kill anywhere from 5,000 to 100,000 people, cause over $500 billion in property damage, and destroy some of the country’s most important infrastructure. Combined, these faults pose one of the biggest threats to American well-being and prosperity in the twenty-first century. Yet efforts to mitigate this risk remain alarmingly limited.

Unlike hurricanes, floods, and tornadoes, which we can now predict with some accuracy, earthquakes seem completely opaque to us. They appear to lie outside the realm of prediction and, by extension, outside the realm of concern, at least for most residents and elected leaders.

As a result, funding for earthquake prediction is close to nonexistent. Science funding is generally risk averse, and over the past 30 years the federal government has spent only about $10 million per year on prediction research (broadly defined), around 0.5 percent of annual earth science spending. Even that budget goes mostly to probabilistic long-term forecasting, fault modeling, and data capture and integration. This work helps identify where earthquakes can happen, but not when one will happen.

Until recently, even unlimited funding would have found few genuine pathways to the ‘holy grail’ breakthrough: minutes or hours of advance warning. Even a few minutes matter, since simply stepping outside would prevent the majority of fatalities and injuries. The reason such pathways have been limited is simple: earthquakes are complex systems that are difficult to observe. Scientists still have a relatively primitive understanding of the state and movement of each tectonic plate, and even a robust understanding would be computationally difficult to model fully. Data points are few and far between and often capture only part of a full earthquake cycle.

Current approaches to understanding chaotic systems generally involve manually weighing different data points and deciding how each should factor into a prediction model. That becomes impossible as data sources multiply, but foundation models such as Microsoft’s Aurora, built for atmospheric and weather forecasting, have recently proven exceptionally good at understanding and predicting events in chaotic systems. Properly deployed, foundation models offer the strongest potential path to 10 minutes or more of advance warning, yet machine learning remains little used in earthquake prediction.

To effectively deploy foundation models for earthquake prediction, a few things need to be true.

First, sensing technology, reach, and infrastructure need to be an order of magnitude better. There are remarkably few seismometers: California has only about a thousand, roughly one for every 15 miles of fault line, and there are even fewer along underwater faults like Cascadia. More critically, many richer sources of ground-motion data are underutilized or not captured at all. These include InSAR (interferometric synthetic aperture radar), a satellite-based method for measuring ground deformation, and distributed acoustic sensing (DAS), which turns unused or underused telecom fiber-optic cables into sensors that can detect movement at the nanometer scale.

Second, all the data needs to be in one place. Data from different labs and regions, even when they are nearby or funded by the same source, is rarely aggregated, consistently structured, or properly labeled.
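To make "aggregated, structured, and labeled" concrete, here is a minimal sketch of what a shared record format might look like. Everything in it is hypothetical: the `Observation` schema, the two lab formats handled by `from_lab_a` and `from_lab_b`, the event catalog, and the 60-second labeling window are illustrative assumptions, not an existing standard. The point is only that each source is converted to common units (meters) and a common clock (UTC) before being merged and tagged with catalogued events.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class Observation:
    """Hypothetical shared schema for one ground-motion measurement."""
    source: str                        # which lab or instrument network
    station_id: str
    time_utc: datetime                 # timezone-aware, always UTC
    displacement_m: float              # always meters
    event_label: Optional[str] = None  # catalogued event ID, if any


def from_lab_a(row: dict) -> Observation:
    # Hypothetical Lab A format: epoch seconds, displacement in millimeters.
    return Observation(
        source="lab_a",
        station_id=row["sta"],
        time_utc=datetime.fromtimestamp(row["t_epoch"], tz=timezone.utc),
        displacement_m=row["disp_mm"] / 1000.0,
    )


def from_lab_b(rec: dict) -> Observation:
    # Hypothetical Lab B format: ISO 8601 timestamps with a UTC offset, meters.
    return Observation(
        source="lab_b",
        station_id=rec["station"],
        time_utc=datetime.fromisoformat(rec["time"]).astimezone(timezone.utc),
        displacement_m=rec["displacement_m"],
    )


def label_events(obs: list[Observation], catalog: dict[str, datetime],
                 window: timedelta) -> list[Observation]:
    """Tag each observation with the first catalogued event within `window`."""
    labeled = []
    for o in obs:
        match = next((eid for eid, t in catalog.items()
                      if abs(o.time_utc - t) <= window), None)
        labeled.append(replace(o, event_label=match))
    return labeled


# Illustrative inputs in two incompatible formats (made-up values).
lab_a_rows = [{"sta": "A1", "t_epoch": 1_700_000_000, "disp_mm": 2.5}]
lab_b_recs = [{"station": "B7", "time": "2023-11-14T14:13:30-08:00",
               "displacement_m": 0.001}]
catalog = {"EV1": datetime(2023, 11, 14, 22, 13, 25, tzinfo=timezone.utc)}

# Normalize, merge on a single UTC timeline, then attach event labels.
merged = sorted([from_lab_a(r) for r in lab_a_rows] +
                [from_lab_b(r) for r in lab_b_recs],
                key=lambda o: o.time_utc)
labeled = label_events(merged, catalog, window=timedelta(seconds=60))
for o in labeled:
    print(o.source, o.station_id, o.time_utc.isoformat(),
          o.displacement_m, o.event_label)
```

A real pipeline would also have to reconcile sampling rates, sensor orientations, and data-quality flags; the sketch only shows the normalize, merge, and label shape of the work.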

And third, someone needs to persuade data providers to share data, install more sensors in relevant places, aggregate the data, and build, run, fine-tune, and fund the model. In short, the field needs more talent and compute, which means it needs more funding.

Each of these components is feasible, and individual labs are already pursuing all of them on a small scale, but with little coordination or data orchestration and nowhere near enough money to deliver advance warning before the next big earthquake. Despite the urgency and the stakes for the well-being and prosperity of millions of Americans, there is no Manhattan Project-level sprint toward a breakthrough.

There are two realistic paths forward.

The first is an open-source project, in which individuals and organizations pool data, compute, and capital to test individual hypotheses. This would make progress, albeit slowly.

The second, and likely the most effective, is a centralized lab. This lab would provide targeted grants to researchers and universities to explore novel sensing, data capture, and model construction methods, including funding to expand existing sensing methods like distributed acoustic sensing and ocean-bottom seismometers. It would also aggregate, structure, and label both historical data and existing data sources and feeds. Finally, it would build and fine-tune a foundation model while making all data freely available to other labs for analysis and research. With strong leadership, collaborative partnerships with existing institutions, and $25–50 million of funding, a centralized lab has a reasonable chance of achieving a major breakthrough within three to five years.
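For scale, the proposed budget can be put on the same footing as the federal figures cited earlier. This sketch uses only the article's numbers and assumes, as a simplification, that the lab's funding is spread evenly across its three-to-five-year horizon.

```python
# Figures taken from the article; even annual spending is an assumption.
LAB_BUDGET_USD = (25e6, 50e6)              # total lab funding range
LAB_YEARS = (3, 5)                         # horizon for a major breakthrough
FEDERAL_PREDICTION_PER_YEAR = 10e6         # current federal prediction research
PREDICTION_SHARE_OF_EARTH_SCIENCE = 0.005  # "around 0.5 percent"

# Implied total federal earth science spending per year.
earth_science_per_year = FEDERAL_PREDICTION_PER_YEAR / PREDICTION_SHARE_OF_EARTH_SCIENCE

# Lowest annual rate: smallest budget over the longest horizon; highest: the reverse.
lab_low = min(LAB_BUDGET_USD) / max(LAB_YEARS)
lab_high = max(LAB_BUDGET_USD) / min(LAB_YEARS)

print(f"Implied earth science spending: ${earth_science_per_year / 1e9:.1f}B per year")
print(f"Lab spending: ${lab_low / 1e6:.1f}M to ${lab_high / 1e6:.1f}M per year")
print(f"Relative to federal prediction funding: "
      f"{lab_low / FEDERAL_PREDICTION_PER_YEAR:.1f}x to "
      f"{lab_high / FEDERAL_PREDICTION_PER_YEAR:.1f}x")
print(f"Share of implied earth science spending: "
      f"{lab_low / earth_science_per_year:.2%} to "
      f"{lab_high / earth_science_per_year:.2%}")
```

On these numbers, the lab would cost roughly $5 million to $17 million a year: between half and about 1.7 times current federal prediction spending, and well under 1 percent of implied annual earth science spending.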

A catastrophic seismic event cannot be stopped. But it can likely be predicted, and for the first time, the technology exists to make real progress toward the ‘holy grail’. Few challenges are as well matched to foundation models as this one, and we have a rare window to predict disaster before it strikes. Let’s not waste it.

Many thanks to Kelian Dascher-Cousineau, Emily Brodsky, Tom Kalil, Neiman Mathew, and Ben Southwood.

Michael Ioffe is the CEO of Arist.
